The rise of home recording has made it easier than ever to produce music, but capturing great vocals still challenges many creators. Whether you are a songwriter working from your bedroom or an engineer polishing demos, the pressure to deliver professional vocals is high. AI voice tools now offer a practical and creative way to elevate your recordings. These systems analyse your voice and apply intelligent corrections to pitch, timing, and tone while preserving your musical expression.

What Are AI Voice Tools and How Do They Improve Singing?

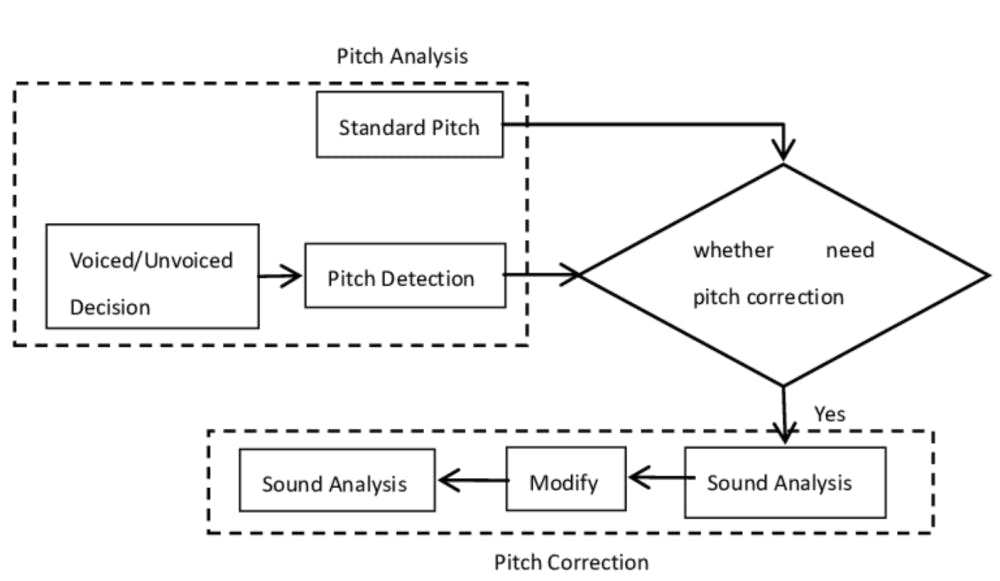

AI voice processors analyse your vocal recording to identify where improvements are needed. They interpret the details of your performance, such as pitch drift, timing against the beat, and tonal balance. When a note slips off key, the AI gently tunes it back to pitch. If phrases are too early or late, it realigns them to match the rhythm. The software can also adjust breathiness, harsh frequencies, or uneven loudness to achieve a more consistent sound.
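As a rough illustration of what "tuning a note back to pitch" means, the sketch below moves a detected frequency part of the way toward the nearest equal-tempered semitone. It is a classical simplification, not how any particular AI product works: the function names, the `strength` parameter, and the A4 = 440 Hz reference are all assumptions made for this example.

```python
import math

A4_HZ = 440.0  # assumed tuning reference for this sketch


def hz_to_midi(freq_hz):
    """Convert a frequency in Hz to a (fractional) MIDI note number."""
    return 69.0 + 12.0 * math.log2(freq_hz / A4_HZ)


def midi_to_hz(midi_note):
    """Convert a (fractional) MIDI note number back to Hz."""
    return A4_HZ * 2.0 ** ((midi_note - 69.0) / 12.0)


def correct_pitch(freq_hz, strength=0.5):
    """Move a detected pitch toward the nearest semitone.

    strength=0.0 leaves the note untouched; strength=1.0 snaps it fully.
    Values in between keep some of the singer's natural deviation.
    """
    midi_note = hz_to_midi(freq_hz)
    target = round(midi_note)  # nearest semitone
    corrected = midi_note + strength * (target - midi_note)
    return midi_to_hz(corrected)


if __name__ == "__main__":
    sharp_a4 = 450.0  # a slightly sharp A4
    for strength in (0.0, 0.5, 1.0):
        print(strength, round(correct_pitch(sharp_a4, strength), 2))
```

A partial `strength` is the simplest way to picture "gentle" correction: the note is pulled toward the target without being flattened onto it, which is why subtle settings tend to keep vibrato audible.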

Modern AI correction systems use deep learning to understand human singing rather than simply forcing notes into tune. This allows natural vibrato and phrasing to remain intact. Research by Johns Hopkins University supports this approach, showing that neural-network models outperform traditional auto-tune algorithms in pitch and tone accuracy.

These tools also expand creative options. They can shift the perceived gender, age, or texture of a voice, allowing artists to experiment with vocal identity. This flexibility makes AI voice tools valuable for both correction and sound design.

Common Singing Problems AI Can Fix

AI systems can address most of the technical vocal issues found in otherwise clean recordings. They can correct:

- Pitch drift and uneven vibrato

- Timing inconsistencies where vocals rush or drag

- Tonal harshness or imbalance between phrases

- Dynamic unevenness where some words are too quiet or too loud

They are particularly effective for tightening harmonies, aligning doubles, or improving demo vocals without heavy manual editing. For best results, the input should be clean and well-recorded. AI cannot fix distorted recordings, poor microphone placement, or performances lacking expression. It enhances what exists but cannot add emotional delivery.
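Of the fixes listed above, timing correction is the easiest to sketch. The example below pulls note onsets toward the nearest position on a tempo grid. It assumes the onset times have already been detected, and the function name, `subdivisions`, and `strength` are hypothetical parameters chosen for illustration rather than settings from any real plugin.

```python
def align_onsets(onsets_sec, bpm, subdivisions=4, strength=1.0):
    """Pull each onset (in seconds) toward the nearest grid position.

    subdivisions is the number of grid steps per beat.
    strength=1.0 snaps fully to the grid; lower values keep some feel.
    """
    if bpm <= 0 or subdivisions <= 0:
        raise ValueError("bpm and subdivisions must be positive")
    step = 60.0 / bpm / subdivisions  # grid spacing in seconds
    aligned = []
    for onset in onsets_sec:
        nearest = round(onset / step) * step
        aligned.append(onset + strength * (nearest - onset))
    return aligned


if __name__ == "__main__":
    # At 120 BPM with 2 steps per beat, the grid spacing is 0.25 s.
    rushed_and_dragged = [0.02, 0.27, 0.49]
    print(align_onsets(rushed_and_dragged, bpm=120, subdivisions=2))
```

Aligning a double to its lead vocal follows the same idea, except that the "grid" is the lead's onset times instead of the tempo.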

How to Choose the Right AI Voice Tool

Choosing the right AI voice tool depends on your goals, workflow, and technical setup.

Define your purpose: If you need subtle tuning, choose a simple plugin that integrates directly into your DAW. For more advanced transformations, look for tools that offer voice modeling or creative character options.

Check compatibility: Make sure your DAW supports the plugin format (VST3, AU, or AAX). Some tools need strong CPUs or GPUs, while others rely on cloud servers.

Decide between local and cloud processing: Local plugins offer real-time correction without internet dependency. Cloud tools often feature more advanced AI models but may introduce latency or cost per use.
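To put rough numbers on the latency trade-off, the delay added by one audio buffer is the buffer size divided by the sample rate. The sketch below applies that formula and adds an optional network term for cloud tools. Treating a local round trip as two buffers (one in, one out) is a simplification, and the 80 ms network figure is a made-up value for illustration only.

```python
def buffer_latency_ms(buffer_size_samples, sample_rate_hz):
    """Delay contributed by a single audio buffer, in milliseconds."""
    return 1000.0 * buffer_size_samples / sample_rate_hz


def round_trip_ms(buffer_size_samples, sample_rate_hz, network_ms=0.0):
    """Simplified round trip: one input buffer, one output buffer,
    plus any network delay (zero for a purely local plugin)."""
    one_way = buffer_latency_ms(buffer_size_samples, sample_rate_hz)
    return 2.0 * one_way + network_ms


if __name__ == "__main__":
    local = round_trip_ms(256, 48000)
    cloud = round_trip_ms(256, 48000, network_ms=80.0)  # hypothetical network delay
    print(f"local: {local:.1f} ms, cloud: {cloud:.1f} ms")
```

Real systems add converter and driver overhead on top of this, so the result is best read as a lower bound when comparing setups.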

Consider pricing models: Options range from subscriptions to perpetual licenses or pay-per-use tokens. Choose based on how often you’ll use the tool.
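A quick break-even calculation can make the pricing choice concrete. The sketch below compares the three models over a chosen period. Every price in it is hypothetical and does not reflect any real product, so substitute the figures from the tools you are actually considering.

```python
import math


def breakeven_months(perpetual_price, monthly_fee):
    """Months after which a subscription has cost at least as much
    as buying the perpetual license outright."""
    return math.ceil(perpetual_price / monthly_fee)


def compare_costs(uses_per_month, months, monthly_fee,
                  perpetual_price, price_per_use):
    """Return the cheapest option and the total cost of each model."""
    costs = {
        "subscription": monthly_fee * months,
        "perpetual": perpetual_price,
        "pay_per_use": price_per_use * uses_per_month * months,
    }
    cheapest = min(costs, key=costs.get)
    return cheapest, costs


if __name__ == "__main__":
    # Hypothetical prices, for illustration only.
    print(breakeven_months(perpetual_price=120.0, monthly_fee=10.0))
    print(compare_costs(uses_per_month=2, months=12, monthly_fee=10.0,
                        perpetual_price=120.0, price_per_use=1.0))
```

With occasional use, pay-per-use tends to win; as usage grows, the flat-cost options catch up.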

Best Workflow for Using AI Voice Tools Effectively

The foundation of great AI correction is a clean recording. Record dry vocals without reverb or heavy effects, use a pop filter, and treat your room to minimize reflections. For step-by-step guidance, see Recording Vocals at Home: Pro Tips for Mic Placement & Room Treatment.

- Record clean, dry takes. Avoid compression, delay, or reverb during capture.

- Load your AI voice plugin. Let it analyse the full section for context.

- Select the right preset. Choose one that suits your genre or target sound and adjust the vocal range if needed.

- Refine the mix. After processing, use light EQ, compression, and ambience to help vocals sit naturally.

- Clean and polish. Learn more from How to Clean Vocal Tracks: Crossfades, Noise Removal & Pro Mixing Tips.

- Record separate harmonies. Avoid duplicating the same take with different presets to prevent an artificial sound.

AI works best as a precision tool inside a broader vocal chain. It should complement, not replace, good tracking, mic placement, and mixing skills.
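Because the workflow above depends on a clean take, it can help to check a recording for clipping before any AI processing. The following is a minimal sketch that assumes the audio has already been loaded as floating-point samples between -1.0 and 1.0. The function name and the 0.999 threshold are assumptions for this example, not an industry standard.

```python
import math


def clipping_report(samples, threshold=0.999):
    """Summarise peak level and clipping for normalised float samples.

    samples: sequence of floats in the range [-1.0, 1.0].
    threshold: absolute level at or above which a sample counts as clipped.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    peak = max(abs(s) for s in samples)
    clipped = sum(1 for s in samples if abs(s) >= threshold)
    # Peak level in dB relative to full scale; silence has no finite value.
    peak_dbfs = 20.0 * math.log10(peak) if peak > 0 else float("-inf")
    return {
        "peak": peak,
        "peak_dbfs": peak_dbfs,
        "clipped_fraction": clipped / len(samples),
    }


if __name__ == "__main__":
    take = [0.1, -0.5, 1.0, -1.0]  # toy data: half the samples hit full scale
    print(clipping_report(take))
```

If more than a handful of samples sit at full scale, re-recording with lower input gain is usually a better fix than any correction applied afterwards.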

Why AI Voice Correction Has Become Essential in Music Production

AI voice correction has grown into a trusted tool for vocal production across all levels of music creation. It allows producers to save takes that might otherwise be unusable and empowers artists to achieve a polished sound quickly. The focus is on refinement, not perfection.

Tools like SoundID VoiceAI help creators reach professional-level results without deep engineering experience or expensive studio sessions. By balancing technology with artistic control, these systems make vocal production more accessible and expressive.

FAQ: AI Voice Correction and Vocal Production

Can AI make me sound professional if I’m not a trained singer?

It can correct pitch and timing, but cannot replace expression or emotion. A good performance still matters.

Should I apply AI correction during or after recording?

After recording is best, as it gives you full control over the result and avoids adding monitoring latency while you track.

Can AI tools remove background noise?

Most focus on vocal tuning, not noise cleanup. Combine them with noise-removal tools for best results.

Will my vocals sound robotic?

Only if correction strength is overused. Subtle settings preserve natural vibrato and tone.

What are the system requirements?

Cloud systems depend on your internet connection; local plugins rely on CPU or GPU performance. Always verify compatibility before buying.

Where can I learn more about improving my recordings?

For better results at the source, explore Sonarworks articles: