The AISIS phenomenon didn’t just break the internet – it exposed how accessible AI voice cloning has become for music producers. When two UK producers released AI-generated Oasis tracks in April 2023, garnering 100,000 views overnight and Liam Gallagher’s approval, they demonstrated that bedroom producers can now create convincing vocal clones with weekend-level effort.

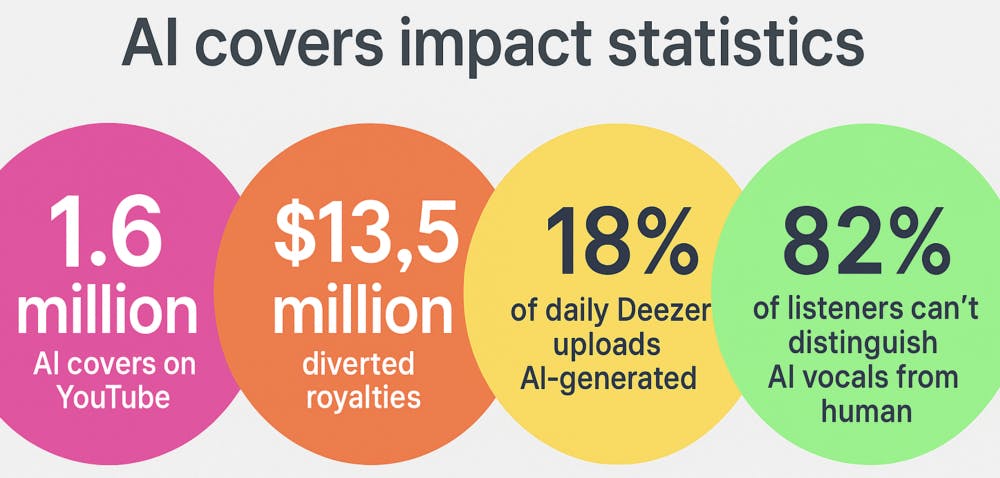

This accessibility has unleashed a flood of AI covers across streaming platforms, with YouTube hosting over 1.6 million AI-generated vocal performances and diverting an estimated $13.5 million in royalties from original artists.

The Technology Behind AI Music Cloning

Modern generative voice frameworks like So-VITS-SVC and RVC have dramatically lowered the barrier to entry. Producers need only about ten minutes of vocal stems and a basic GPU to create convincing voice clones. The economic appeal is undeniable: zero studio costs, no upfront licensing fees, and immediate content creation capability.

Key factors driving adoption:

- TikTok’s algorithm rewards novel AI vocal content

- YouTube Shorts amplifies viral AI covers

- Zero initial licensing costs attract bedroom producers

- Nostalgia-driven content performs well on social platforms

Industry Expert Perspectives on AI Voice Technology

Economic Impact Analysis

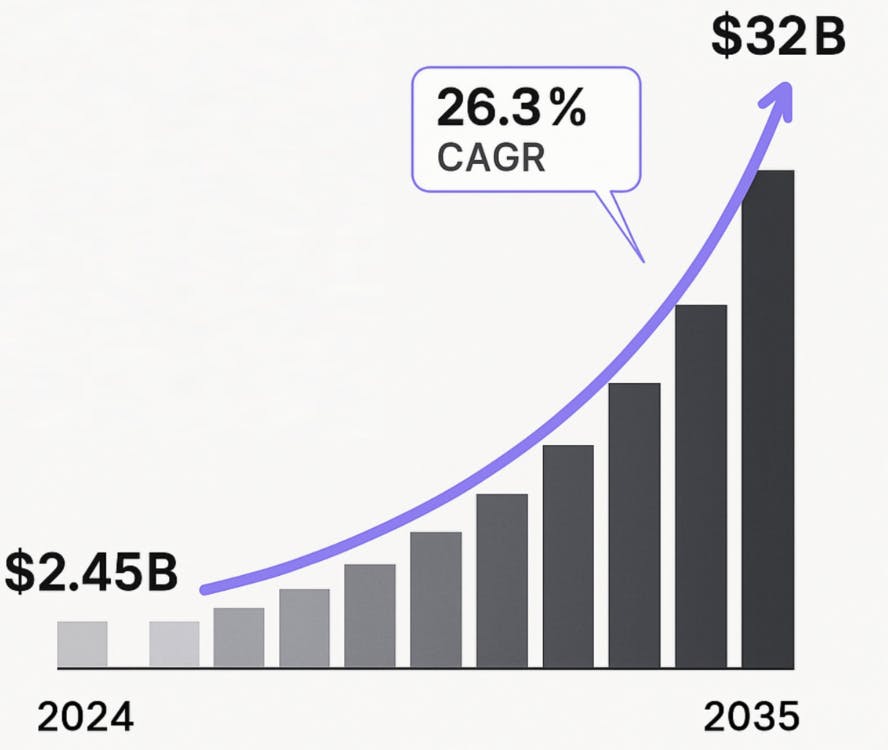

Will Page (former Spotify chief economist) warns that streaming services already process 120,000 new tracks daily. Adding unlimited AI-generated content could collapse royalty pools unless platforms implement dynamic, human-weighted payout systems.
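To see why a flood of uploads dilutes payouts, and what a human-weighted system might change, here is a minimal Python sketch of a pro-rata pool split. The `Track` class, the `ai_weight` multiplier, and every number in it are hypothetical illustrations, not any platform’s actual formula.

```python
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    streams: int
    ai_generated: bool


def pro_rata_payouts(tracks, pool, ai_weight=1.0):
    """Split `pool` in proportion to weighted stream counts.

    `ai_weight` is a hypothetical multiplier applied to AI-generated
    tracks; a value of 1.0 reproduces a plain pro-rata split.
    """
    weighted = {
        t.title: t.streams * (ai_weight if t.ai_generated else 1.0)
        for t in tracks
    }
    total = sum(weighted.values())
    if total == 0:
        return {title: 0.0 for title in weighted}
    return {title: pool * w / total for title, w in weighted.items()}


# Illustrative catalog: one human track and one AI cover, equal streams.
catalog = [
    Track("human_single", 1_000, ai_generated=False),
    Track("ai_cover", 1_000, ai_generated=True),
]

plain = pro_rata_payouts(catalog, pool=100.0)
weighted = pro_rata_payouts(catalog, pool=100.0, ai_weight=0.25)
```

With equal stream counts, the plain split pays each track half of the pool. Under the assumed 0.25 weight, the human track’s share rises to 80 out of 100 and the AI cover’s falls to 20.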

Platform Strategy Considerations

Mark Mulligan (MIDiA Research) predicts platforms will need dedicated “AI lanes” – explicit tagging and discovery sections – to prevent human and algorithmic voices from blurring together in user experience.

Consumer Trust Factors

Tracy Gardner (Warner Music SVP) highlights the risk to newer streaming demographics, particularly country and rock listeners over 40 who are adopting voice-controlled listening. For these audiences, unlabeled AI clones could undermine still-fragile trust in streaming.

The Psychology of AI Voice Perception

Cognitive research points to a consistent pattern in how listeners respond to AI vocals. When a synthetic voice sounds convincingly human, listeners engage positively. Overly perfect vocals, however, trigger an “audio uncanny valley”, where unnatural smoothness creates discomfort.

Critical finding: 82% of listeners cannot reliably distinguish AI from human vocals in professionally mixed tracks, yet discovering AI origins often creates cognitive dissonance that erodes trust in artists and platforms.

Legal Landscape and Regulatory Response

The legal framework is evolving rapidly across multiple jurisdictions. The U.S. Copyright Office’s 2025 report on AI and copyright states that purely AI-generated works cannot receive copyright protection, while leaving room to protect AI-assisted works that contain sufficient human authorship.

Current legislation:

- Tennessee’s ELVIS Act criminalizes unlicensed voice cloning

- Major labels have sent opt-out letters under EU copyright rules, warning AI developers not to train on their catalogs

- Ongoing lawsuits against AI companies like Suno and Udio

- The proposed U.S. No AI FRAUD Act would create a federal right in a person’s voice and likeness, which could strengthen enforcement considerably

Industry response: Labels are suing AI startups while also negotiating licensing deals with them, which suggests eventual regulated coexistence rather than total prohibition.

Essential Producer Survival Guide

Legal Protection Strategies

Secure proper licensing for every cover version and obtain written consent for any voice usage beyond your own. Include AI usage clauses in all new contracts, including collaboration agreements.

Technology and Ethics

Choose transparent tools like properly licensed voice AI plugins that integrate directly with your DAW workflow. Watermark your original content with inaudible tracking hashes for copy-fraud protection.
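As a rough illustration of the idea behind a tracking hash, the sketch below derives a short bit sequence from a SHA-256 hash of an owner label and writes it into the least significant bits of 16-bit PCM samples. This is a toy example under stated assumptions: the function names and the `artist-demo-001` label are hypothetical, and a plain least-significant-bit mark does not survive lossy encoding or resampling, so commercial watermarking tools rely on more robust techniques.

```python
import hashlib


def tracking_bits(owner_id: str, n_bits: int = 64) -> list[int]:
    """Derive a short bit sequence from a SHA-256 hash of an owner label."""
    digest = hashlib.sha256(owner_id.encode("utf-8")).digest()
    bits = []
    for byte in digest:
        for shift in range(7, -1, -1):
            bits.append((byte >> shift) & 1)
    return bits[:n_bits]


def embed(samples: list[int], bits: list[int]) -> list[int]:
    """Write each bit into the least significant bit of one sample."""
    if len(bits) > len(samples):
        raise ValueError("not enough samples to hold the watermark")
    marked = list(samples)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract(samples: list[int], n_bits: int) -> list[int]:
    """Read the least significant bit of the first n_bits samples."""
    return [s & 1 for s in samples[:n_bits]]


# Stand-in for decoded 16-bit PCM audio (hypothetical sample values).
pcm = [0, 1200, -1200, 32767, -32768] * 20
bits = tracking_bits("artist-demo-001")
marked = embed(pcm, bits)
recovered = extract(marked, len(bits))
```

Each marked sample differs from the original by at most one quantization step, which is why the change is inaudible in practice. The same property is what makes this approach fragile.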

Maintain transparency by crediting AI assistance in your releases – audiences increasingly reward honesty over deception.

Business Opportunities

Consider releasing official voice models with revenue-sharing terms, following examples like Grimes’ licensing approach. Follow alerts from your performing rights organization (PRO) about changing legislation.

Revenue and Rights Management

Deezer’s reported figures show that 18% of its daily uploads are AI-generated, and Spotify’s share may well be higher. This shift requires producers to understand:

- Mechanical licensing requirements for AI covers

- Voice rights and personality protections

- Revenue attribution in AI-assisted productions

- Platform-specific AI content policies

Future-Proofing Your Music Production

The intersection of AI technology and music creation will continue evolving at computational speed while cultural acceptance develops at human pace. Successful producers will:

- Embrace controlled experimentation with licensed AI tools while maintaining artistic integrity

- Build audience trust through transparent AI usage disclosure

- Stay legally compliant as regulations rapidly develop

- Focus on emotional connection, since human reaction remains the ultimate measure of musical success

The Path Forward

Rather than viewing AI voice technology as a threat, forward-thinking producers can position themselves as early adopters of ethical AI integration. The technology offers new creative possibilities when approached with proper licensing, transparency, and respect for original artists’ rights.

The key lies in balancing innovation with integrity – using AI as a creative instrument rather than a replacement for human artistry. Success will depend on aligning ethical practices with economic opportunities while maintaining the authentic connection between music and audience.

Frequently Asked Questions: AI Voice Cloning for Music Producers

Q: Can I legally clone my own voice for backing vocals and harmonies?

A: Yes, using AI to clone your own voice is generally legal since you own the rights to your vocal likeness. However, document your process and maintain proof of ownership. This is particularly useful for creating consistent backing vocals across multiple tracks without additional recording sessions.

Q: What’s the realistic cost of professional AI voice cloning in 2025?

A: Professional solutions range from $29 to $199 per month for plugin-based tools with built-in licensing, while enterprise-level voice modeling can cost $5,000 to $50,000 for custom development. Free open-source options exist but require significant technical expertise and come with no licensing or legal protection.

Q: How can I tell if someone has cloned my voice without permission?

A: Monitor major platforms using Google Alerts for your artist name, use audio fingerprinting services, and consider working with music recognition companies that specialize in AI detection. Watermarking your original vocals can also help prove ownership in legal disputes.

Q: Will streaming platforms start banning AI-generated vocal content?

A: Current industry trends suggest labeling requirements rather than outright bans. Platforms are more likely to implement AI content tags and separate discovery algorithms than completely prohibit synthetic vocals, given the volume already being uploaded daily.

Q: What’s the difference between AI voice cloning and traditional vocal processing?

A: Traditional processing (autotune, vocoders, harmonizers) modifies existing vocal performances, while AI cloning generates entirely new vocal content that mimics specific voice characteristics. The legal and ethical implications differ significantly between enhancement and generation.

Q: How do I protect my studio clients from unauthorized voice cloning?

A: Include AI protection clauses in client contracts, offer vocal watermarking services, educate clients about voice rights, and maintain detailed session documentation. Consider offering “voice insurance” as a premium service for high-profile clients.

Q: Can AI voice cloning replace session singers for commercial projects?

A: While technically possible, commercial use requires careful legal consideration. Union regulations, licensing agreements, and client preferences often favor human performers for major label releases. AI vocals work best for demos, independent releases, and specific creative applications.

Q: What should I know about international AI voice cloning laws?

A: Regulations vary dramatically by region. The EU emphasizes consent and data protection, while US states like Tennessee have specific criminal penalties. Always consult legal counsel for commercial projects, especially for international distribution or artist collaborations.
